Using MetalLB together with Ingress

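I. MetalLB

1. Introduction

Kubernetes does not offer an implementation of network load balancers (Services of type LoadBalancer) for bare-metal clusters. The Network LB implementations that ship with Kubernetes are all glue code that calls out to various IaaS platforms (GCP, AWS, Azure, etc.). If you are not running on a supported IaaS platform, LoadBalancers will remain in the "pending" state indefinitely when created.

Bare-metal cluster operators are left with two lesser tools to bring user traffic into their clusters: "NodePort" and "externalIPs" Services. Both options have significant downsides for production use, which makes bare-metal clusters second-class citizens in the Kubernetes ecosystem.

MetalLB aims to redress this imbalance by offering a Network LB implementation that integrates with standard network equipment, so that external services on bare-metal clusters also "just work" as much as possible.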

2. Deployment and installation

2.1 Environment preparation

- Set the kube-proxy mode to ipvs

```shell
kubectl edit configmap -n kube-system kube-proxy
```

In the editor, set the mode to ipvs (strict ARP is enabled in the same block):

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true
```

- Enable strict ARP in kube-proxy (the same change, scripted)

```shell
# see what changes would be made, returns nonzero returncode if different
kubectl get configmap kube-proxy -n kube-system -o yaml | \
sed -e "s/strictARP: false/strictARP: true/" | \
kubectl diff -f - -n kube-system

# actually apply the changes, returns nonzero returncode on errors only
kubectl get configmap kube-proxy -n kube-system -o yaml | \
sed -e "s/strictARP: false/strictARP: true/" | \
kubectl apply -f - -n kube-system
```

2.2 Install with the manifest

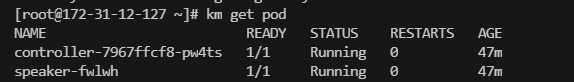

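```shell
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.9/config/manifests/metallb-native.yaml
```

This deploys MetalLB to your cluster, under the metallb-system namespace. The components in the manifest are:

- The metallb-system/controller Deployment. This is the cluster-wide controller that handles IP address assignments.
- The metallb-system/speaker DaemonSet. This is the component that speaks the protocol(s) of your choice to make the services reachable.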

2.3 Install MetalLB with Helm

```shell
helm repo add metallb https://metallb.github.io/metallb
helm install metallb metallb/metallb
```

3. Using MetalLB

3.1 Configure the IP pool

- Older versions configure MetalLB's IP pool through a ConfigMap:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.25.1.100-172.25.1.200
```

- Newer versions (v0.13 and later) manage it with CRDs:

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: zstack-edge

namespace: metallb-system

spec:

addresses:

- 172.31.12.116/32

autoAssign: true

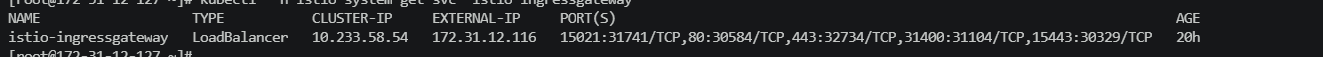

avoidBuggyIPs: false3.2配置LoadBalance的Service
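The object that follows is the live `istio-ingressgateway` Service as returned by `kubectl get -o yaml`, so it also carries fields filled in by the cluster (`uid`, `resourceVersion`, `clusterIP`, the `nodePort`s, `status`). A manifest written by hand only needs a selector, ports and `type: LoadBalancer`. Below is a minimal sketch; the name `demo-lb`, the selector `app: demo` and the port numbers are hypothetical placeholders, and the optional `metallb.universe.tf/address-pool` annotation, which asks MetalLB to allocate from one specific pool, assumes the `zstack-edge` pool from 3.1 exists.

```yaml
# Minimal sketch of a LoadBalancer Service served by MetalLB.
# demo-lb, app: demo and the port numbers are hypothetical placeholders.
apiVersion: v1
kind: Service
metadata:
  name: demo-lb
  namespace: default
  annotations:
    # Optional: allocate the external IP from a specific IPAddressPool
    metallb.universe.tf/address-pool: zstack-edge
spec:
  type: LoadBalancer
  selector:
    app: demo
  ports:
  - name: http
    port: 80          # port exposed on the external IP
    protocol: TCP
    targetPort: 8080  # container port on the selected Pods
```

Note that `zstack-edge` holds a single /32 address, which the Service below already uses, so in this exact cluster a second Service requesting that pool would stay pending until the pool is enlarged.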

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"istio-ingressgateway","install.operator.istio.io/owning-resource":"unknown","install.operator.istio.io/owning-resource-namespace":"istio-system","istio":"ingressgateway","istio.io/rev":"default","operator.istio.io/component":"IngressGateways","operator.istio.io/managed":"Reconcile","operator.istio.io/version":"1.17.2","release":"istio"},"name":"istio-ingressgateway","namespace":"istio-system"},"spec":{"ports":[{"name":"status-port","port":15021,"protocol":"TCP","targetPort":15021},{"name":"http2","port":80,"protocol":"TCP","targetPort":8080},{"name":"https","port":443,"protocol":"TCP","targetPort":8443},{"name":"tcp","port":31400,"protocol":"TCP","targetPort":31400},{"name":"tls","port":15443,"protocol":"TCP","targetPort":15443}],"selector":{"app":"istio-ingressgateway","istio":"ingressgateway"},"type":"LoadBalancer"}}
    metallb.universe.tf/ip-allocated-from-pool: zstack-edge
  creationTimestamp: "2023-05-30T07:20:36Z"
  labels:
    app: istio-ingressgateway
    install.operator.istio.io/owning-resource: unknown
    install.operator.istio.io/owning-resource-namespace: istio-system
    istio: ingressgateway
    istio.io/rev: default
    operator.istio.io/component: IngressGateways
    operator.istio.io/managed: Reconcile
    operator.istio.io/version: 1.17.2
    release: istio
  name: istio-ingressgateway
  namespace: istio-system
  resourceVersion: "2916811"
  uid: ada82838-73e2-486c-a83d-31309f4561f5
spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: 10.233.58.54
  clusterIPs:
  - 10.233.58.54
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: status-port
    nodePort: 31741
    port: 15021
    protocol: TCP
    targetPort: 15021
  - name: http2
    nodePort: 30584
    port: 80
    protocol: TCP
    targetPort: 8080
  - name: https
    nodePort: 32734
    port: 443
    protocol: TCP
    targetPort: 8443
  - name: tcp
    nodePort: 31104
    port: 31400
    protocol: TCP
    targetPort: 31400
  - name: tls
    nodePort: 30329
    port: 15443
    protocol: TCP
    targetPort: 15443
  selector:
    app: istio-ingressgateway
    istio: ingressgateway
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
    - ip: 172.31.12.116
```

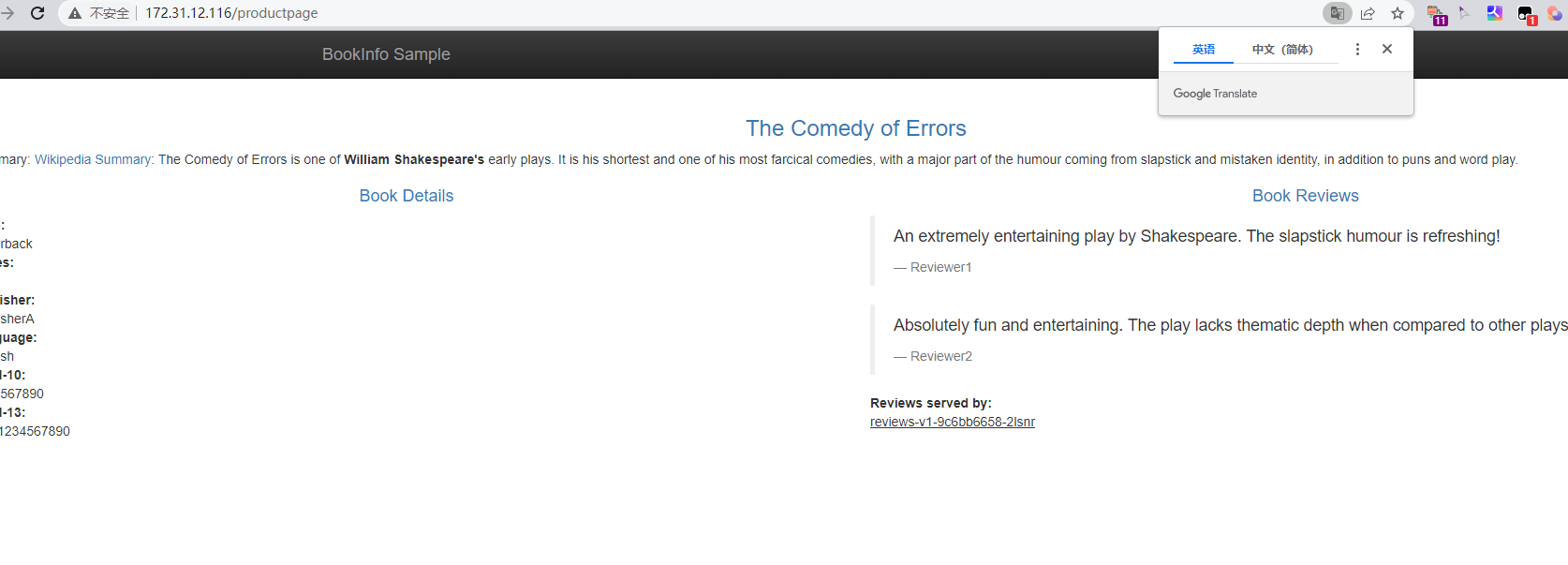
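The CRD-based setup in 3.1 shows only an `IPAddressPool`. In layer-2 mode, MetalLB v0.13+ announces (answers ARP/NDP for) addresses only from pools selected by an `L2Advertisement`, and an `L2Advertisement` that lists no `ipAddressPools` selects every pool. As an illustration, the sketch below translates the old ConfigMap from 3.1 (pool `default`, layer2, range `172.25.1.100-172.25.1.200`) into the two CRDs; for the `zstack-edge` pool you would list `zstack-edge` instead. It is a sketch under those assumptions, not the configuration of the cluster shown above.

```yaml
# Sketch: the old layer2 ConfigMap from 3.1 expressed with MetalLB v0.13+ CRDs.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
  - 172.25.1.100-172.25.1.200   # ranges and CIDRs are both accepted
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  # Pools whose addresses are announced in layer-2 mode; omit to announce all pools
  ipAddressPools:
  - default
```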

II. Ingress

1. Introduction

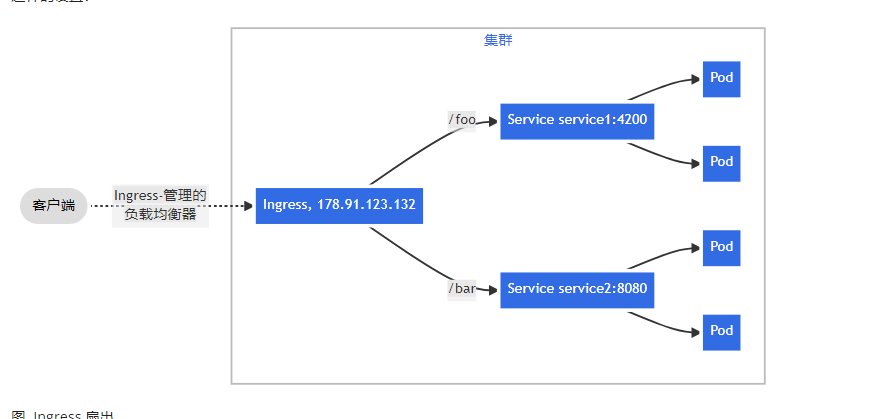

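Ingress exposes HTTP and HTTPS routes from outside the cluster to Services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

An Ingress may be configured to give Services externally reachable URLs, load-balance traffic, terminate SSL/TLS, and offer name-based virtual hosting. An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure an edge router or additional frontends to help handle the traffic.

An Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a Service of type Service.Type=NodePort or Service.Type=LoadBalancer.

Here is a simple example where an Ingress sends all of its traffic to one Service: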

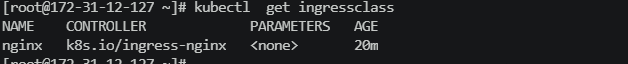
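In YAML, such an Ingress can be written with only a `defaultBackend` and no `rules`. A minimal sketch, where `test-ingress` and the Service `test` listening on port 80 are hypothetical names and `ingressClassName: nginx` assumes the ingress-nginx controller installed in the next section:

```yaml
# Sketch: an Ingress with no rules, so every request goes to one Service.
# test-ingress and the Service "test" on port 80 are hypothetical names.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: test
      port:
        number: 80
```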

2. Deployment and installation

2.1 Environment preparation

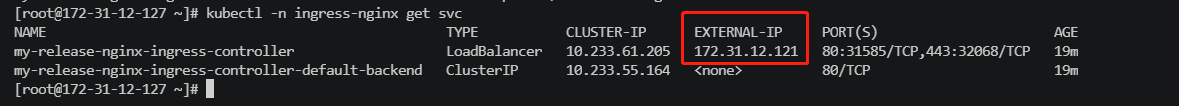

- Install the ingress-nginx controller

```shell
$ helm repo add bitnami https://charts.bitnami.com/bitnami
$ helm install my-release bitnami/nginx-ingress-controller
```
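The Ingress examples in this article set `ingressClassName: nginx`, which must match the name of an `IngressClass` object. Controller charts normally create this object for you (`kubectl get ingressclass` lists what exists). For reference, a hand-written `IngressClass` looks roughly like the sketch below, assuming the controller identifies itself as `k8s.io/ingress-nginx`, the value ingress-nginx uses by default. If the chart exposes the controller through a Service of type LoadBalancer (a common default), MetalLB is what gives that Service its external IP.

```yaml
# Sketch of an IngressClass for ingress-nginx; usually created by the chart.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
  annotations:
    # Optional: use this class for Ingresses that set no ingressClassName
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx
```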

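3. Using Ingress

- The spec field is the core of an Ingress resource. It mainly contains the following three fields:
  - rules: the list of forwarding rules for this Ingress. If no rules are defined, or a request matches none of them, the traffic is forwarded to the default backend.
  - defaultBackend (named backend in the older beta APIs): the default backend that serves requests matching no rule. An Ingress must define at least one of defaultBackend or rules; this field lets the load balancer specify a global default backend.
  - tls: the TLS configuration. Only the default port 443 is supported. If the list members point to different hosts, they are multiplexed on that port through the SNI TLS extension.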
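The three fields above can be seen together in one manifest. This is a sketch only: the host `app.example.com`, the Services `api-service` and `fallback-service` and the Secret `app-example-tls` are hypothetical names.

```yaml
# Sketch showing the three main spec fields together.
# Host, Service names and Secret name are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: spec-fields-demo
spec:
  ingressClassName: nginx
  # tls: hosts served over HTTPS on port 443, with their certificate Secret
  tls:
  - hosts:
    - app.example.com
    secretName: app-example-tls
  # rules: host- and path-based routing
  rules:
  - host: app.example.com
    http:
      paths:
      - path: /api
        pathType: Prefix
        backend:
          service:
            name: api-service
            port:
              number: 8080
  # defaultBackend: receives requests that match no rule
  defaultBackend:
    service:
      name: fallback-service
      port:
        number: 80
```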

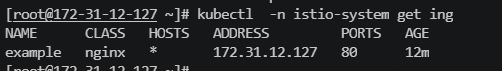

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example
  namespace: istio-system
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - backend:
          service:
            name: kiali
            port:
              number: 20001
        path: /kiali
        pathType: Prefix
```

- Access address

- Example:

An example Ingress that makes use of the controller:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example
  namespace: ingress-nginx
spec:
  ingressClassName: nginx
  rules:
  - host: www.example.com
    http:
      paths:
      - backend:
          service:
            name: example-service
            port:
              number: 80
        path: /
        pathType: Prefix
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
  - hosts:
    - www.example.com
    secretName: example-tls
```

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: ingress-nginx
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls
```
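Encoding the certificate and key to base64 by hand is error-prone. Kubernetes also accepts the PEM text unencoded under `stringData`, which the API server merges into `data` when the Secret is written. A sketch, where the PEM bodies are placeholders to be replaced with a real certificate and key:

```yaml
# Sketch: the same TLS Secret using stringData (plain PEM, no base64).
# The PEM contents below are placeholders, not a usable certificate/key.
apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: ingress-nginx
type: kubernetes.io/tls
stringData:
  tls.crt: |
    -----BEGIN CERTIFICATE-----
    <certificate body>
    -----END CERTIFICATE-----
  tls.key: |
    -----BEGIN PRIVATE KEY-----
    <private key body>
    -----END PRIVATE KEY-----
```

Alternatively, `kubectl create secret tls example-tls --cert=<cert file> --key=<key file> -n ingress-nginx` builds the same Secret directly from files.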