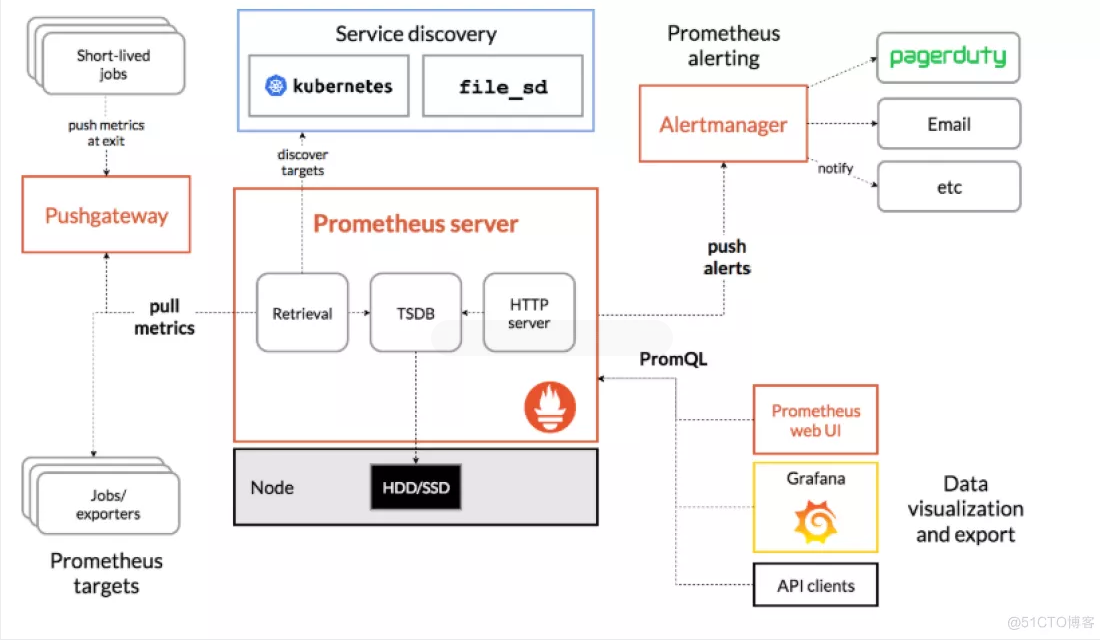

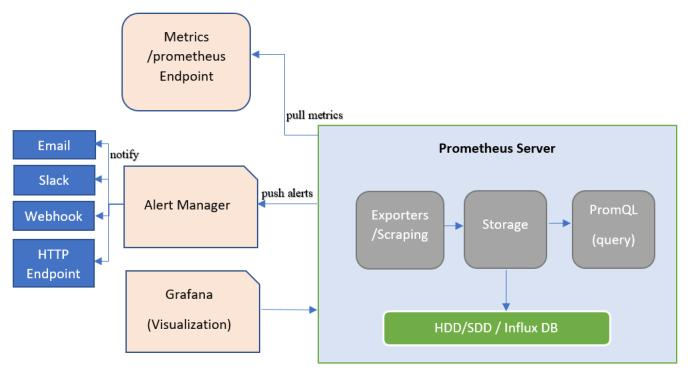

Prometheus system architecture diagram

Manual experiment:

1. Deploy a simple webhook service

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alert-webhook
  namespace: default
  labels:
    app: alert-webhook
spec:
  selector:
    matchLabels:
      app: alert-webhook
  replicas: 1
  template:
    metadata:
      labels:
        app: alert-webhook
    spec:
      containers:
        - name: alert-webhook
          image: 172.32.1.175:10043/demo/alert-webhook:1.0
          ports:
            - containerPort: 8080
              name: alert-webhook
      imagePullSecrets:
        - name: secret-wangsu
---
apiVersion: v1
kind: Service
metadata:
  name: alert-webhook
  namespace: default
spec:
  selector:
    app: alert-webhook
  type: NodePort
  ports:
    - name: alert-webhook
      protocol: TCP
      port: 8080
      targetPort: 8080
```
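The note does not show the code inside the `alert-webhook:1.0` image. The following is a minimal Python stand-in that uses only the standard library. It accepts Alertmanager's POSTs on port 8080 at `/webhook`, the URL configured in `alertmanager.yaml` later in this note. It is only a sketch. The handler class, the in-memory `RECEIVED` list, and the log format are assumptions, not the author's implementation.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for the alert-webhook image; not the author's code.
RECEIVED = []  # decoded payloads, kept in memory for inspection only


class AlertWebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Only the path configured in alertmanager.yaml is accepted.
        if self.path != "/webhook":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self.send_error(400, "invalid JSON")
            return
        RECEIVED.append(payload)
        # Print one line per alert contained in the group notification.
        for alert in payload.get("alerts", []):
            labels = alert.get("labels", {})
            print(f"[{payload.get('status')}] {labels.get('alertname')} "
                  f"{labels.get('namespace', '')}/{labels.get('pod', '')}")
        # A 2xx response tells Alertmanager the notification was delivered.
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request access log


def serve(port=8080, host="0.0.0.0"):
    # Port 8080 matches containerPort/targetPort in the manifests above.
    return HTTPServer((host, port), AlertWebhookHandler)


if __name__ == "__main__":
    serve().serve_forever()
```

Keeping the receiver this thin leaves channel-specific logic (email, chat) to a separate step, which matches the converter design described later in the note.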

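- Edit the `alertmanager.yaml` configuration file stored in a Secret in the `monitoring` namespace (list the Secrets with `kubectl -n monitoring get secrets`).

Reference template: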

global:

resolve_timeout: '5m'

smtp_smarthost: 'smtp.126.com:25'

smtp_from: 'looknicemm@126.com'

smtp_auth_username: 'looknicemm@126.com'

smtp_auth_password: 'KNPYYGBKYJKKOQQA' #邮箱的授权密码 (如果是163邮箱,需要在设置->常规设置->点击左侧的客户端授权密码->开启授权密码)

templates:

- '*.tmpl' #加载所有消息通知模板

inhibit_rules:

- equal: # 如果告警出现critical 那么在相同的namespace和alertname下的 warning|info级别的告警都会被抑制

- namespace

- alertname

source_matchers:

- severity = critical

target_matchers:

- severity =~ warning|info

- equal: # 确保这个配置下的标签内容相同才会抑制,也就是说警报中必须有这三个标签值才会被抑制。

- namespace

- alertname

source_matchers:

- severity = warning

target_matchers:

- severity = info

route:

receiver: 'default-receiver'

group_by: ['alertname','namespace'] #将传入警报分组在一起的标签。例如,cluster=A和alertname=LatencyHigh的多个警报将批处理为单个组。

group_wait: 30s #当传入的警报创建新的警报组时,至少等待"30s"发送初始通知。

group_interval: 5m #当发送第一个通知时,等待"5m"发送一批新的警报,这些警报开始针对该组触发。 (如果是group_by里的内容为新的如:alertname=1,alertname=2 会马上发送2封邮件, 如果是group_by之外的会等待5m触发一次)

repeat_interval: 4h #如果警报已成功发送,请等待"4h"重新发送,重复发送邮件的时间间隔

routes: #所有与下列子路由不匹配的警报将保留在根节点,并被分派到'default-receiver'。

- receiver: 'database-pager'

group_wait: 10s

group_by: [service]

match_re:

service: mysql|cassandra #带有service=mysql或service=cassandra的所有警报都被发送到'database-pager'

receivers:

- name: default-receiver #不同的报警 发送给不同的邮箱

webhook_configs:

- url: http://alert-webhook.default:8080/webhook

send_resolved: true # 恢复通知提醒

- name: database-pager

email_configs:

- to: '690081650@qq.com'

html: '{{ template "alertemp.html" . }}' #应用哪个模板

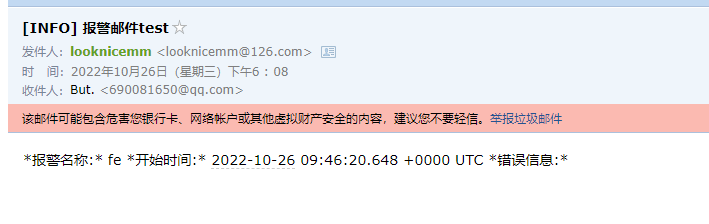

headers: { Subject: "[INFO] 报警邮件test" } #邮件头信息
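The following is a simplified Python model of how this particular `route` and `inhibit_rules` configuration picks a receiver and decides which alerts are muted. It is a sketch under stated assumptions, not Alertmanager's code. It handles one level of child routes and ignores `continue`, timers, and silences. `ROUTE` and `INHIBIT_RULES` are hand-transcribed from the YAML, and all function names are hypothetical.

```python
import re

# Hand-transcribed, simplified copy of the route in alertmanager.yaml.
ROUTE = {
    "receiver": "default-receiver",
    "routes": [
        {"receiver": "database-pager", "match_re": {"service": "mysql|cassandra"}},
    ],
}

# Equality matchers ("severity = critical") are modelled as regexes without
# metacharacters, which behave the same under a full match.
INHIBIT_RULES = [
    {"source": {"severity": "critical"}, "target": {"severity": "warning|info"},
     "equal": ["namespace", "alertname"]},
    {"source": {"severity": "warning"}, "target": {"severity": "info"},
     "equal": ["namespace", "alertname"]},
]


def matches(matchers, labels):
    # Regex matchers are anchored: the pattern must match the whole label
    # value. A missing label is treated as the empty string.
    return all(
        re.fullmatch(f"(?:{pattern})", labels.get(name, "")) is not None
        for name, pattern in matchers.items()
    )


def pick_receiver(labels, route=ROUTE):
    # Child routes are tried in order and the first match wins
    # (this model ignores `continue: true`).
    for child in route.get("routes", []):
        if matches(child.get("match_re", {}), labels):
            return child["receiver"]
    # Anything no child route matched stays on the root route.
    return route["receiver"]


def is_inhibited(target, firing, rules=INHIBIT_RULES):
    # `target` is muted if some other firing alert matches a rule's source
    # matchers and agrees with the target on every label listed in `equal`.
    for rule in rules:
        if not matches(rule["target"], target):
            continue
        for source in firing:
            if source is target or not matches(rule["source"], source):
                continue
            if all(source.get(l, "") == target.get(l, "") for l in rule["equal"]):
                return True
    return False
```

In this model, an alert labelled `service=mysql` goes to `database-pager`. An alert labelled `service=mysql-proxy` falls back to `default-receiver`, because the regex must match the whole value.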

Delete the Alertmanager pod, then check the status page of the zstack-prometheus-alertmanager Service to confirm that the change took effect: http://172.31.12.6:32140/#/alerts

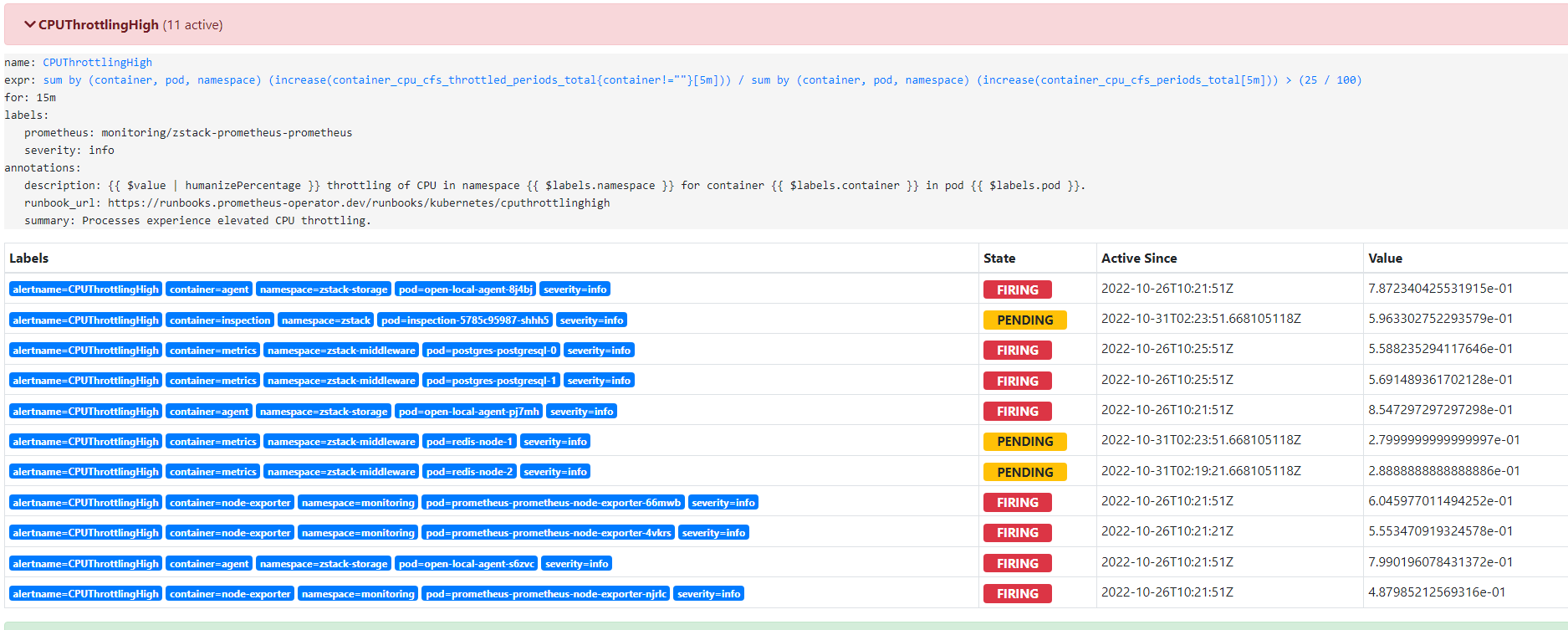

The corresponding alert notifications are received.

Alertmanager: the data it POSTs to the webhook:

```json
{
  "version": "4",
  "groupKey": "{}:{alertname=\"CPUThrottlingHigh\", namespace=\"zstack-middleware\"}",
  "status": "firing",
  "receiver": "default-receiver",
  "groupLabels": {
    "alertname": "CPUThrottlingHigh",
    "namespace": "zstack-middleware"
  },
  "commonLabels": {
    "alertname": "CPUThrottlingHigh",
    "container": "metrics",
    "namespace": "zstack-middleware",
    "prometheus": "monitoring/zstack-prometheus-prometheus",
    "severity": "info"
  },
  "commonAnnotations": {
    "runbook_url": "https://github.com/kubernetes-monitoring/kubernetes-mixin/tree/master/runbook.md#alert-name-cputhrottlinghigh",
    "summary": "Processes experience elevated CPU throttling."
  },
  "externalURL": "http://zstack-prometheus-alertmanager.monitoring:9093",
  "alerts": [
    {
      "labels": {
        "alertname": "CPUThrottlingHigh",
        "container": "metrics",
        "namespace": "zstack-middleware",
        "pod": "postgres-postgresql-0",
        "prometheus": "monitoring/zstack-prometheus-prometheus",
        "severity": "info"
      },
      "annotations": {
        "description": "36.43% throttling of CPU in namespace zstack-middleware for container metrics in pod postgres-postgresql-0.",
        "runbook_url": "https://github.com/kubernetes-monitoring/kubernetes-mixin/tree/master/runbook.md#alert-name-cputhrottlinghigh",
        "summary": "Processes experience elevated CPU throttling."
      },
      "startsAt": "2022-10-26T06:44:50.751Z",
      "endsAt": "0001-01-01T00:00:00Z"
    },
    {
      "labels": {
        "alertname": "CPUThrottlingHigh",
        "container": "metrics",
        "namespace": "zstack-middleware",
        "pod": "postgres-postgresql-1",
        "prometheus": "monitoring/zstack-prometheus-prometheus",
        "severity": "info"
      },
      "annotations": {
        "description": "36.28% throttling of CPU in namespace zstack-middleware for container metrics in pod postgres-postgresql-1.",
        "runbook_url": "https://github.com/kubernetes-monitoring/kubernetes-mixin/tree/master/runbook.md#alert-name-cputhrottlinghigh",
        "summary": "Processes experience elevated CPU throttling."
      },
      "startsAt": "2022-10-25T21:18:20.751Z",
      "endsAt": "0001-01-01T00:00:00Z"
    }
  ]
}
```
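The group-level fields of that payload can be derived from the individual alerts. In the following sketch, the `groupKey` format is copied from the payload for the root route (the `{}` prefix). `commonLabels`/`commonAnnotations` keep only the pairs that are identical across all alerts. This agrees with the payload, where `pod` and `description` differ and are therefore absent. The function names are hypothetical, and this is not Alertmanager's source.

```python
def group_key(group_labels, route_path="{}"):
    # "{}" is the route prefix the payload shows for the root route (no matchers).
    inner = ", ".join(f'{k}="{v}"' for k, v in sorted(group_labels.items()))
    return f"{route_path}:{{{inner}}}"


def common(maps):
    # Keep only key/value pairs that are identical in every map.
    if not maps:
        return {}
    first, rest = maps[0], maps[1:]
    return {k: v for k, v in first.items() if all(m.get(k) == v for m in rest)}


def group_fields(alerts, group_by):
    if not alerts:
        return {}
    labels = [a.get("labels", {}) for a in alerts]
    # Every alert in a group shares the group_by values, so the first one suffices.
    group_labels = {k: labels[0][k] for k in group_by if k in labels[0]}
    return {
        "groupKey": group_key(group_labels),
        "groupLabels": group_labels,
        "commonLabels": common(labels),
        "commonAnnotations": common([a.get("annotations", {}) for a in alerts]),
    }
```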

Custom alerting rules:

1. Reference Prometheus rules (covering most common cases): https://awesome-prometheus-alerts.grep.to/

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    custom-alerting-rule-level: cluster
    role: alert-rules
  name: custom-alerting-rule
  namespace: monitoring
spec:
  groups:
    - name: alerting.custom.defaults.0
      rules:
        - alert: fe
          annotations:
            kind: Node
            message: ""
            resources: '["172-20-17-188"]'
            rule_update_time: "2022-10-09T10:14:48Z"
            rules: '[{"_metricType":"instance:node_cpu_utilisation:rate5m{$1}","condition_type":"<","thresholds":"90","unit":"%"}]'
            summary: "Node 172.32.1.174 CPU usage < 90%"
          expr: instance:node_cpu_utilisation:rate5m{instance=~"172.32.1.174.*|172.32.1.179.*"} < 0.9
          for: 1m
          labels:
            alerttype: metric
            severity: warning
            service: mysql
```
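The following Python sketch shows how the rule's `expr` selects series. The `=~` matcher keeps only series whose `instance` fully matches the regex. Because there is no `bool` modifier, `< 0.9` acts as a filter: every series that survives becomes one alert instance. The sample values and the `:9100` ports are made up for illustration, and `select`/`compare_lt` are hypothetical helpers, not a PromQL engine.

```python
import re


def select(series, matchers):
    # Instant-vector selector: keep series whose labels fully match every
    # =~ regex (PromQL anchors regex matchers at both ends).
    return [
        s for s in series
        if all(re.fullmatch(f"(?:{rx})", s["labels"].get(name, ""))
               for name, rx in matchers.items())
    ]


def compare_lt(series, threshold):
    # Without `bool`, a comparison is a filter: series for which it is false
    # are dropped, and survivors keep their original sample value.
    return [s for s in series if s["value"] < threshold]
```

Note that the unescaped `.` in these patterns matches any character, so the selector is slightly looser than the IP addresses suggest.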

2. Detection based on a metric of a specific workload

```promql
node_namespace_pod_container:container_cpu_usage_seconds_total:sum_rate * ON(pod) GROUP_LEFT() namespace_workload_pod:kube_pod_owner:relabel{workload_type="deployment", workload=~"alert-webhook|asd"} > 0
```

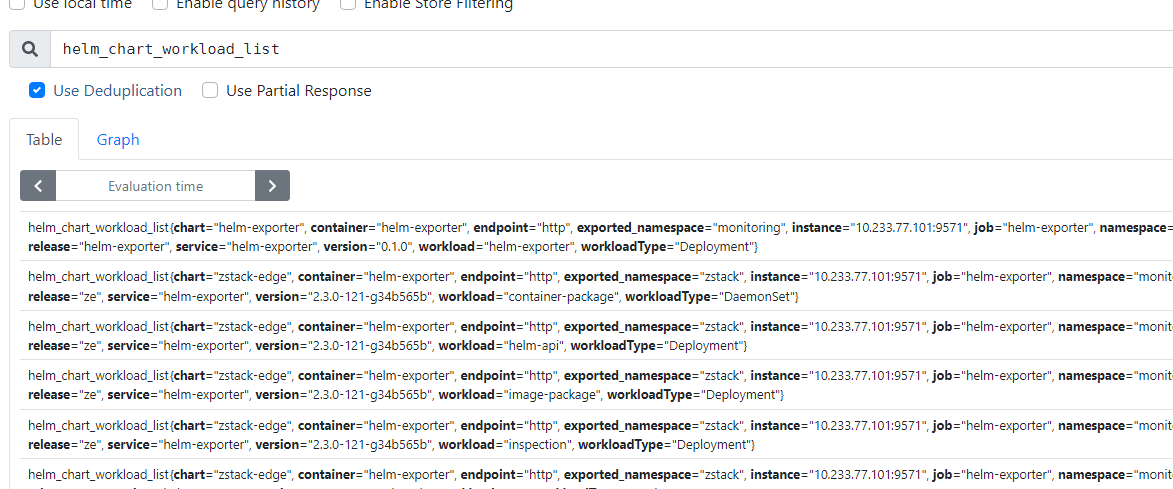

`workload_type` takes one of the values `deployment`, `statefulset`, or `daemonset`.

3. Detection based on a metric across all workloads (sts, deploy, ds) of a Helm application. This requires an additional, self-developed helm-exporter collector.

```promql
node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate * ON(pod) GROUP_LEFT() (namespace_workload_pod:kube_pod_owner:relabel{} * on(workload, workload_type) GROUP_LEFT() helm_chart_workload_list{chart=~"helm-exporter|zstack-edge"})
```

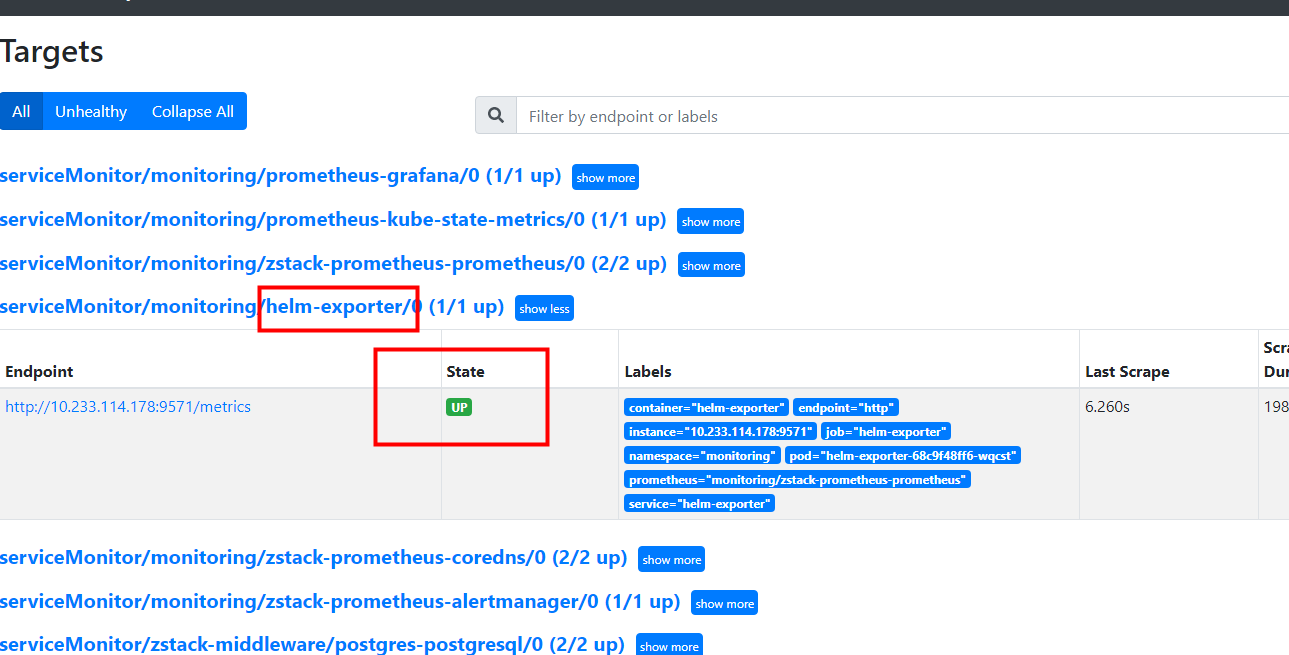
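The `* ON(...) GROUP_LEFT()` joins in items 2 and 3 are PromQL many-to-one vector matching. The following simplified Python model illustrates the idea. It ignores `__name__` handling and label lists inside `group_left(...)`, and the helper name is hypothetical. Left-hand series without a partner are dropped. Duplicate partners on the "one" side are an error in PromQL. Owner/info-style series such as `kube_pod_owner` typically carry the value 1, so multiplying by them keeps the CPU value unchanged.

```python
def join_group_left(left, right, on):
    # Index the "one" side by the on() labels. Two series with the same key
    # would make PromQL reject the query (many-to-many matching).
    index = {}
    for r in right:
        key = tuple(r["labels"].get(name, "") for name in on)
        if key in index:
            raise ValueError(f"many-to-many matching on {dict(zip(on, key))}")
        index[key] = r
    out = []
    for l in left:
        key = tuple(l["labels"].get(name, "") for name in on)
        match = index.get(key)
        if match is not None:
            # group_left() with no label list: result keeps the left-hand labels.
            out.append({"labels": dict(l["labels"]),
                        "value": l["value"] * match["value"]})
    return out
```

The same function covers the nested join in item 3 by calling it with `on=["workload", "workload_type"]` for the inner product and `on=["pod"]` for the outer one.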

Custom exporters:

1. Develop the exporter with the client_golang SDK. Reference demo: https://github.com/prometheus/client_golang/blob/main/examples/gocollector/main.go

2. Configure Prometheus to scrape the exporter through a ServiceMonitor (the operator eventually writes it into the Prometheus configuration file /etc/prometheus/config_out/prometheus.env.yaml):

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  annotations:
    meta.helm.sh/release-name: prometheus
    meta.helm.sh/release-namespace: monitoring
  labels:
    app.kubernetes.io/instance: helm-exporter
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: helm-exporter
    app.kubernetes.io/version: 1.2.3
    helm.sh/chart: helm-exporter-1.2.3_4dc0dfc
  name: helm-exporter
  namespace: monitoring
spec:
  endpoints:
    - path: /metrics
      port: http
  namespaceSelector:
    matchNames:
      - monitoring
  selector:
    matchLabels:
      app.kubernetes.io/name: helm-exporter
```
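The ServiceMonitor above scrapes `/metrics` on the Service port named `http`. The following Python sketch renders one gauge in the Prometheus text exposition format. It is roughly the kind of text such an endpoint returns, and client_golang would normally produce this text for you. The metric `helm_chart_workload_list` is the one queried in item 3. Its label set (`chart`, `workload`, `workload_type`) is inferred from that query, and the sample values are assumptions.

```python
def escape_label_value(v):
    # The text format escapes backslash, double quote and newline in label values.
    return v.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name, help_text, samples):
    # samples: list of (labels dict, numeric value) pairs.
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_str = ",".join(
            f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items())
        )
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"
```

Emitting one series with value 1 per (chart, workload) pair is what lets the `* on(workload, workload_type) GROUP_LEFT()` join in item 3 act as a filter without changing the CPU values.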

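Design:

The end-to-end alerting pipeline:

- Prometheus evaluates the alerting rules and fires alerts. To add, delete, or modify rules, see PrometheusRule (https://blog.csdn.net/wzy_168/article/details/103290235).

- Prometheus sends its alerts to Alertmanager, which applies the configured silencing, inhibition, and grouping.

- Alertmanager is configured with a webhook whose URL is the address of the Edge custom converter.

- The Edge custom converter's webhook then calls the other notification channels (e.g. email, DingTalk, WeChat...).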
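The converter's code is not part of this note. The following hedged sketch shows its core step: turning one Alertmanager group notification (the JSON payload shown earlier) into a channel-neutral title plus markdown text. The `{title, text}` shape and the function name are assumptions. Wrapping the result in a specific channel's request format (email, DingTalk, WeChat) and sending it are deliberately left out.

```python
def to_markdown(payload):
    # Hypothetical channel-neutral message: a title plus a markdown body.
    status = payload.get("status", "unknown").upper()
    group = payload.get("groupLabels", {})
    title = f"[{status}] " + ", ".join(f"{k}={v}" for k, v in sorted(group.items()))
    lines = [f"### {title}"]
    for alert in payload.get("alerts", []):
        labels = alert.get("labels", {})
        ann = alert.get("annotations", {})
        # Prefer the per-alert description, fall back to the shared summary.
        text = ann.get("description") or ann.get("summary", "")
        target = labels.get("pod", labels.get("instance", ""))
        lines.append(f"- **{labels.get('severity', '?')}** {target}: {text}")
    return {"title": title, "text": "\n".join(lines)}
```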

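Glossary:

1. Alert states:

Inactive: the alert is not active.

Pending: the alert expression's condition is met, but has not yet held for the duration specified by `for`.

Firing: the alert expression's condition is met and has held for at least the duration specified by `for`.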
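The following is a simplified Python model of how `for` drives these three states for a single series. It assumes the condition is checked once per rule-evaluation interval and ignores details such as resolved-alert bookkeeping and restarts. The class name is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertState:
    for_seconds: float
    active_since: Optional[float] = None  # when the condition first became true

    def evaluate(self, now: float, condition: bool) -> str:
        if not condition:
            # Condition false: reset, the alert goes back to Inactive.
            self.active_since = None
            return "Inactive"
        if self.active_since is None:
            self.active_since = now
        # Firing once the condition has held for at least `for`; Pending before that.
        if now - self.active_since >= self.for_seconds:
            return "Firing"
        return "Pending"
```

With `for: 1m`, a condition that becomes true at t=0 is Pending at t=0s and t=30s and Firing at t=60s. A rule with `for: 0` fires on the first evaluation that matches.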

References:

- https://blog.csdn.net/wzy_168/article/details/103295971 Using prometheus-operator (understanding Alertmanager's notification mechanism)

- https://yunlzheng.gitbook.io/prometheus-book/parti-prometheus-ji-chu/alert/alert-manager-route Label-based alert routing

- https://github.com/prometheus/client_golang Prometheus Go client SDK, supports developing custom collectors

- https://github.com/sstarcher/helm-exporter Helm application exporter, suitable as a base for further development

- https://prometheus.io/docs/prometheus/latest/configuration/configuration/ Official documentation of the configuration fields

- https://prometheus.io/docs/prometheus/latest/configuration/alerting_rules/ Official documentation of the alerting rule fields